
Overview

This work in progress aims to provide a working automatic manipulation pipeline for the Shadow Robot hardware. To achieve this, we are integrating our ROS interface with the Object Manipulation Stack.

The pipeline performs the following actions:

- Detection of a table and segmentation / recognition of the objects standing on it, using the Tabletop Object Perception stack. Here we use the Microsoft Kinect as the source of 3D data. This step builds up a model of the environment.

- Issuing an order to grasp one of the objects via the GUI plugin.

- Dynamic approach, grasping, lifting and placing of the object in a zone defined by the user, while avoiding collisions with the environment.
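The table detection in the first step can be pictured with the simplified sketch below. It is not the code of tabletop_object_detector; it only illustrates the general idea under stated assumptions: fit the dominant plane (the table) with RANSAC, then keep the points lying above it. The function names are hypothetical, the cloud is assumed to be a list of (x, y, z) tuples in metres expressed in a frame whose +z axis points up, and a real detector also has to split the remaining points into individual objects, which this sketch omits.

```python
import math
import random

# A plane is (a, b, c, d) with unit normal (a, b, c) and a*x + b*y + c*z + d = 0.


def plane_from_points(p1, p2, p3):
    """Return the plane through three points, or None if they are (nearly) collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],  # cross product u x v
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(comp * comp for comp in n))
    if norm < 1e-9:
        return None
    a, b, c = (comp / norm for comp in n)
    d = -(a * p1[0] + b * p1[1] + c * p1[2])
    return (a, b, c, d)


def signed_distance(plane, p):
    a, b, c, d = plane
    return a * p[0] + b * p[1] + c * p[2] + d


def fit_table_plane(points, iterations=200, tolerance=0.01, rng=None):
    """RANSAC: sample 3 points, keep the plane with the most inliers within `tolerance`."""
    rng = random.Random(0) if rng is None else rng
    best_plane, best_count = None, -1
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        count = sum(1 for p in points if abs(signed_distance(plane, p)) <= tolerance)
        if count > best_count:
            best_plane, best_count = plane, count
    return best_plane


def points_above_table(points, plane, tolerance=0.01):
    """Keep the points lying more than `tolerance` above the plane.

    Assumes the cloud is expressed in a frame whose +z axis points up.
    """
    a, b, c, d = plane
    if c < 0:  # flip the normal so that it points up (+z)
        a, b, c, d = -a, -b, -c, -d
    return [p for p in points if signed_distance((a, b, c, d), p) > tolerance]
```

Calling `fit_table_plane(cloud)` and then `points_above_table(cloud, plane)` on a cloud containing a flat table and an object standing on it returns only the object's points. The fixed random seed keeps the result reproducible; more iterations make finding the table more likely on noisy clouds.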

Here is a screencast showing the whole process:

NB: There are still many things to refine in the current approach (grasping unrecognized objects, checking whether we have a good grasp on an object, etc.). The main idea behind this implementation, however, is that thanks to the inherent modularity of ROS, anyone can work on improving a smaller part of the process (e.g. checking the grasp) while keeping the full pipeline working.

What is going on

Point Cloud Path

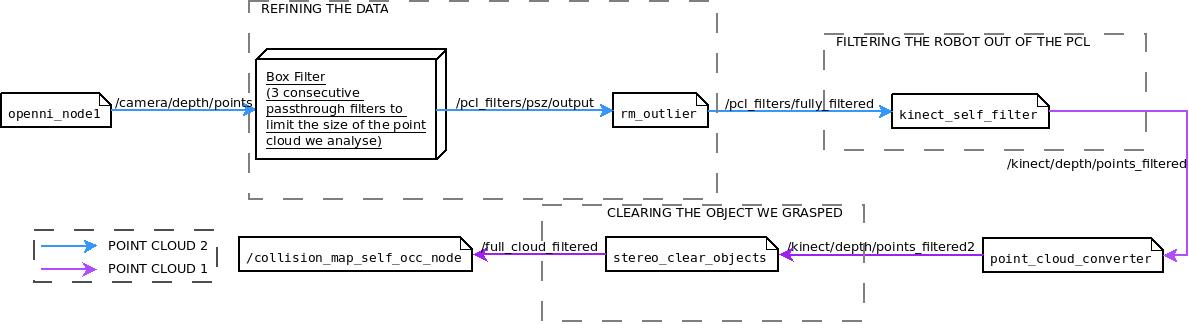

The point cloud is published by the Kinect on /camera/depth/points. The diagram below shows the nodes through which the point cloud is transformed.

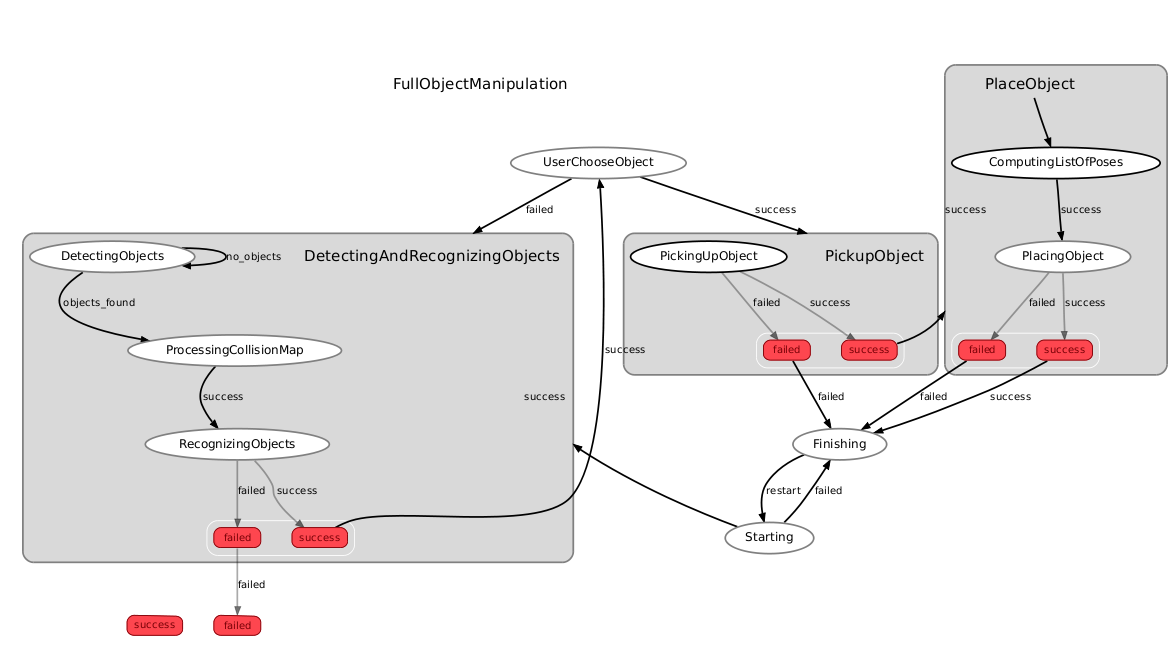

Below is a diagram of the state machine controlling the whole pick-up and place process. You can run the state machine with the following command:

$ rosrun sr_object_manipulation_smach sr_object_manipulation_smach_main.py
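To show how such a state machine is driven, here is a plain-Python sketch that does not use smach itself. As in smach, each state returns an outcome string, and a transition table maps each (state, outcome) pair to the next state or to a terminal outcome. The state names, outcomes and the retry-on-missed-grasp transition are hypothetical and do not necessarily match those in sr_object_manipulation_smach.

```python
# Plain-Python model of an outcome-driven state machine (not smach itself).
# State names and outcomes are hypothetical.


def run_state_machine(states, transitions, start,
                      terminal=("succeeded", "failed"), max_steps=50):
    """Run states until a terminal outcome is reached.

    Returns (final outcome, list of visited state names).
    """
    current = start
    visited = []
    for _ in range(max_steps):
        visited.append(current)
        outcome = states[current]()          # execute the state
        nxt = transitions[current][outcome]  # look up where this outcome leads
        if nxt in terminal:
            return nxt, visited
        current = nxt
    return "failed", visited  # give up if the machine never terminates


def make_pick_and_place(detect, pickup, place):
    """Wire three callables into a hypothetical detect -> pick up -> place machine."""
    states = {"DETECT": detect, "PICKUP": pickup, "PLACE": place}
    transitions = {
        "DETECT": {"found": "PICKUP", "nothing": "failed"},
        "PICKUP": {"grasped": "PLACE", "missed": "DETECT"},  # re-detect after a miss
        "PLACE": {"placed": "succeeded", "dropped": "failed"},
    }
    return states, transitions
```

For example, if the first grasp attempt misses and the second succeeds, the machine visits DETECT, PICKUP, DETECT, PICKUP, PLACE and finishes with "succeeded". Keeping the transitions in a table rather than in the state code is what lets one part (e.g. grasp checking) be replaced without touching the others.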

[UNSTABLE specific] Currently, the sr_object_manipulation launch files do not start the following required nodes:

- openni (to get point cloud data)

- tabletop_object_detector (a patch is required on 32-bit systems; see http://answers.ros.org/question/1399/tabletop_segmentation-fails-eigen-error)

- household_objects_database (make sure you are using the latest household_database dump)

Start them manually before launching the main sr_object_manipulation.launch or sr_object_manipulation_smach_main.py.
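Before launching, you can check that these nodes are up. The sketch below parses the output of `rosnode list` (one node name per line) and reports which required nodes are missing. The name fragments are hypothetical guesses based on the package names; replace them with the node names your own launch files actually use.

```python
import subprocess

# Hypothetical name fragments: adjust them to your actual node names.
REQUIRED_NODE_FRAGMENTS = ("openni", "tabletop_object_detector", "household_objects_database")


def missing_nodes(rosnode_list_output, required=REQUIRED_NODE_FRAGMENTS):
    """Return the required fragments that match no running node name."""
    running = [line.strip() for line in rosnode_list_output.splitlines() if line.strip()]
    return [frag for frag in required if not any(frag in name for name in running)]


def check_running_nodes():
    """Query the ROS master via `rosnode list` (needs a running roscore)."""
    output = subprocess.check_output(["rosnode", "list"]).decode()
    return missing_nodes(output)
```

With a roscore running, an empty list from `check_running_nodes()` means every fragment matched a running node; otherwise the returned fragments tell you which nodes still need to be started.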

Install

The stack is now built on ROS Diamondback, with no overlays except openni for the Kinect. Install the pr2-desktop variant of ROS Diamondback to make sure you have all the necessary packages.

Once you have the Kinect working, you can download our code from the manipulation branch on Launchpad to any directory you choose (/code/ in our example):

$ cd /code/
$ bzr branch lp:~ugocupcic/sr-ros-interface/manipulation

You then need to add the downloaded directories to your ROS_PACKAGE_PATH environment variable. If you source the ROS setup.bash in your ~/.bashrc file, edit ~/.bashrc and add the following line (assuming you downloaded the code to /code/):

export ROS_PACKAGE_PATH=/code/manipulation/shadow_robot:/code/manipulation/shadow_robot/sr_object_manipulation:${ROS_PACKAGE_PATH}

Don't forget to source your .bashrc file, and then you can compile everything:

$ rosmake --rosdep-install sr_hand sr_grasp_planner sr_object_manipulation_launch sr_control_gui sr_mechanism_controllers sr_move_arm sr_tactile_sensors

If compilation fails at some point, you may be missing some packages. Try adding the not-yet-stable Object Manipulation Stack and the Tabletop Object Perception stack, which provides tabletop_object_detector and tabletop_collision_map_processing.